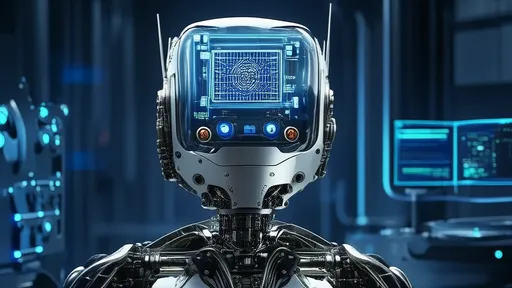

In the rapidly evolving landscape of robotics, bionic systems have emerged as a frontier where biology and engineering converge to create machines that mimic the elegance and efficiency of living organisms. The design of perception and control systems for these robots represents one of the most complex and fascinating challenges, blending insights from neuroscience, materials science, and artificial intelligence. Unlike traditional industrial robots, which operate in structured environments, bionic robots are often intended to navigate and interact with the unpredictable, dynamic real world—much like the animals they emulate. This demands a radical rethinking of how these machines sense, process, and respond to their surroundings.

At the heart of any bionic robot is its perception system, which serves as the gateway to understanding the environment. Drawing inspiration from biological sensory organs, engineers have developed advanced sensor suites that go beyond conventional cameras and lidar. For instance, artificial compound eyes, modeled after those of insects, provide wide-field views and exceptional motion detection capabilities. These systems utilize arrays of micro-lenses and photodetectors to capture visual information from multiple angles simultaneously, enabling robots to detect fast-moving objects with minimal processing latency. Similarly, bio-inspired auditory sensors replicate the directional hearing mechanisms of animals like owls or cats, using microphone arrays and sophisticated algorithms to locate sound sources accurately even in noisy environments.
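To make the auditory example concrete, the sketch below estimates the time difference of arrival (TDOA) between two microphones. This is the engineering analogue of the interaural time difference that owls and cats use to localize sound. It is a minimal sketch that assumes a single far-field source, two microphones a known distance apart, and a speed of sound of 343 m/s. The function names are illustrative. Practical arrays usually add more microphones and noise-robust weighting such as GCC-PHAT.

```python
import numpy as np

def estimate_tdoa(sig_a: np.ndarray, sig_b: np.ndarray, fs: float) -> float:
    """Delay (s) of sig_b relative to sig_a, taken from the cross-correlation peak."""
    corr = np.correlate(sig_b, sig_a, mode="full")
    # Index 0 of the "full" output corresponds to a lag of -(len(sig_a) - 1) samples.
    lag = int(np.argmax(corr)) - (len(sig_a) - 1)
    return lag / fs

def bearing_deg(tdoa: float, mic_spacing_m: float, speed_of_sound: float = 343.0) -> float:
    """Far-field bearing: the extra path to the farther mic is spacing * sin(theta)."""
    s = np.clip(speed_of_sound * tdoa / mic_spacing_m, -1.0, 1.0)
    return float(np.degrees(np.arcsin(s)))

# Example: broadband noise reaching microphone B 5 samples after microphone A.
fs = 48_000
rng = np.random.default_rng(0)
mic_a = rng.standard_normal(4096)
mic_b = np.concatenate([np.zeros(5), mic_a[:-5]])
tdoa = estimate_tdoa(mic_a, mic_b, fs)
print(f"TDOA = {tdoa * 1e6:.1f} us, bearing = {bearing_deg(tdoa, 0.2):.1f} deg")
```

With a 5-sample delay at 48 kHz and 0.2 m spacing, the estimated bearing is roughly 10 degrees off the array's broadside axis, on microphone A's side.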

Perhaps even more groundbreaking are the developments in tactile and haptic feedback systems. By integrating flexible electronic skins embedded with pressure, temperature, and texture sensors, bionic robots can "feel" their surroundings, with some prototypes approaching the sensitivity of human skin. These e-skins often employ materials such as piezoelectric polymers, which generate electrical charge under mechanical stress, or carbon nanotube composites, whose electrical resistance changes as they are strained. This not only allows for gentle manipulation of objects, a critical ability for robots working alongside humans, but also provides vital data for navigating complex terrain. For example, a bionic hand equipped with such sensors can adjust its grip force in real time to prevent slipping or crushing, while a legged robot can sense ground compliance and adjust its gait accordingly.
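The grip-adjustment behavior can be sketched as a proportional controller built on the Coulomb friction limit. The sketch assumes three things. First, the e-skin reports normal and tangential fingertip forces. Second, the friction coefficient is known. Third, the actuator delivers exactly the commanded force. The class name, safety margin, gain, and 20 N crush limit are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class SlipAwareGrip:
    """Proportional grip-force controller built on the Coulomb friction limit."""
    friction_coeff: float        # assumed static friction coefficient (fingertip/object)
    safety_margin: float = 1.3   # hold this factor above the minimum non-slip force
    max_force_n: float = 20.0    # hypothetical crush limit for the grasped object
    gain: float = 0.5            # fraction of the force error corrected each step

    def update(self, normal_n: float, tangential_n: float) -> float:
        """Return the next commanded normal force from measured fingertip forces."""
        # Slip begins when |Ft| > mu * Fn, so the minimum holding force is |Ft| / mu.
        required = self.safety_margin * abs(tangential_n) / self.friction_coeff
        command = normal_n + self.gain * (required - normal_n)
        # Clamp: never exceed the crush limit, never command a negative force.
        return min(max(command, 0.0), self.max_force_n)

# Example: an object pulling 2 N tangentially, held with mu = 0.5.
grip = SlipAwareGrip(friction_coeff=0.5)
force = 1.0
for _ in range(20):
    force = grip.update(force, tangential_n=2.0)
print(f"settled grip force: {force:.2f} N")  # approaches 1.3 * 2 / 0.5 = 5.2 N
```

The margin above the slip limit trades a little extra squeeze for tolerance to errors in the assumed friction coefficient. The upper clamp enforces the "don't crush" half of the requirement.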

However, sensing alone is insufficient without the computational architecture to make sense of the data. Here, the control system design takes center stage, often embracing biomimetic principles to achieve robustness and adaptability. Traditional centralized control architectures, where a single processor handles all decision-making, are often too slow and brittle for dynamic environments. Instead, many bionic robots adopt hierarchical or distributed control strategies reminiscent of nervous systems. In such setups, low-level reflexes, such as withdrawing a limb upon detecting an obstacle, are handled by local processors to ensure rapid response, while higher-level planning, such as path navigation, is managed by a central unit. This decentralization reduces computational bottlenecks and enhances the robot's ability to react in real time.
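A minimal sketch of this two-layer arrangement uses priority arbitration in the spirit of subsumption-style architectures. A limb-level reflex is evaluated on every control tick and pre-empts a central planner that updates ten times less often. The 1 kHz tick, 5 cm proximity threshold, retract speed, and all function names are hypothetical. A real robot would run the two layers on separate processors rather than in one loop.

```python
from typing import List, Optional, Tuple

def local_reflex(proximity_m: float, threshold_m: float = 0.05) -> Optional[float]:
    """Fast limb-level layer: retract at a fixed speed if an obstacle is too close."""
    return -0.2 if proximity_m < threshold_m else None

def central_plan(position_m: float, target_m: float, gain: float = 2.0) -> float:
    """Slow central layer: proportional velocity command toward the planned target."""
    return gain * (target_m - position_m)

def simulate(proximity_m: List[float], target_m: float, dt: float = 0.001,
             planner_period: int = 10) -> Tuple[float, List[str]]:
    """Reflex runs every tick; the planner refreshes only every `planner_period` ticks."""
    position, planned_velocity = 0.0, 0.0
    sources = []
    for tick, prox in enumerate(proximity_m):
        if tick % planner_period == 0:           # central unit: lower update rate
            planned_velocity = central_plan(position, target_m)
        reflex = local_reflex(prox)               # local processor: checked every tick
        if reflex is not None:
            velocity, source = reflex, "reflex"   # reflex pre-empts the plan immediately
        else:
            velocity, source = planned_velocity, "planner"
        position += velocity * dt
        sources.append(source)
    return position, sources

# Example: an obstacle appears for a single tick midway through a planner cycle.
readings = [1.0] * 25 + [0.01] + [1.0] * 14
final_pos, sources = simulate(readings, target_m=0.5)
print(sources[24:27])  # ['planner', 'reflex', 'planner']
```

The reflex fires at tick 25 even though the planner will not refresh until tick 30. This illustrates why local handling shortens reaction time.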

Machine learning, particularly deep reinforcement learning and spiking neural networks, plays a pivotal role in refining these control systems. By training in simulated environments that mimic real-world physics, robots can learn complex behaviors such as flying, swimming, or walking without explicit programming. Spiking neural networks, which model the asynchronous firing of biological neurons, are especially promising for low-power, event-driven processing—ideal for resource-constrained robotic platforms. These algorithms enable robots to adapt to new situations, learn from mistakes, and even develop emergent behaviors that were not explicitly coded, thereby bridging the gap between pre-programmed actions and genuine autonomy.
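The event-driven character of spiking networks is easiest to see in a single leaky integrate-and-fire (LIF) neuron, a standard building block of SNNs. The neuron integrates its input, leaks back toward rest, and produces output only at discrete threshold crossings. The membrane parameters below are illustrative textbook-range values assumed for this sketch, not figures from any particular neuromorphic chip. Integration uses a simple forward-Euler step.

```python
from typing import List, Sequence, Tuple

def simulate_lif(input_current_a: Sequence[float], dt: float = 1e-3,
                 tau_m: float = 20e-3, v_rest: float = -65e-3,
                 v_reset: float = -70e-3, v_thresh: float = -50e-3,
                 r_m: float = 1e7) -> Tuple[List[float], List[float]]:
    """Leaky integrate-and-fire neuron; returns (membrane trace in V, spike times in s)."""
    v = v_rest
    trace, spike_times = [], []
    for step, i_in in enumerate(input_current_a):
        # Forward-Euler step of tau_m * dV/dt = -(V - V_rest) + R_m * I
        v += (dt / tau_m) * (-(v - v_rest) + r_m * i_in)
        if v >= v_thresh:               # threshold crossing emits a discrete spike event
            spike_times.append(step * dt)
            v = v_reset                 # then the membrane potential resets
        trace.append(v)
    return trace, spike_times

# Example: 1 s of constant input at two current levels.
_, weak = simulate_lif([1e-9] * 1000)    # R*I = 10 mV: settles below threshold
_, strong = simulate_lif([2e-9] * 1000)  # R*I = 20 mV: fires repeatedly
print(len(weak), len(strong))
```

The weak input never produces a spike, so a downstream neuron receives no events and does no work. That sparsity is what event-driven neuromorphic hardware exploits to save power.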

Another critical aspect is the integration of perception and control through sensorimotor loops. In nature, animals continuously fine-tune their movements based on sensory feedback, creating a closed-loop system that ensures precision and stability. Bionic robots replicate this through real-time data fusion from multiple sensors, coupled with adaptive controllers that adjust actuator commands on the fly. For instance, a bipedal robot uses input from gyroscopes, accelerometers, and foot pressure sensors to maintain balance while walking over uneven terrain. Advanced filtering techniques, such as Kalman or particle filters, combine these noisy sensory streams into a coherent estimate of the robot's state, enabling smooth and stable operation despite external disturbances.
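A common minimal case of this fusion combines two signals. A gyroscope is smooth but drifts, and an accelerometer-derived tilt angle is drift-free but noisy. The sketch below is a two-state linear Kalman filter that tracks the tilt angle and also estimates the gyro bias. It assumes planar tilt, a constant sample period, and noise values chosen for illustration rather than taken from a real sensor datasheet. A full biped estimator would add foot-pressure data and typically use a nonlinear variant such as an extended or unscented Kalman filter.

```python
import numpy as np

class TiltKalmanFilter:
    """Two-state Kalman filter; state x = [tilt angle (rad), gyro bias (rad/s)]."""

    def __init__(self, q_angle=1e-5, q_bias=1e-8, r_accel=1e-2):
        self.x = np.zeros(2)
        self.P = np.eye(2)
        self.Q = np.diag([q_angle, q_bias])   # process noise (assumed tuning)
        self.R = np.array([[r_accel]])        # accelerometer-angle noise variance (assumed)
        self.H = np.array([[1.0, 0.0]])       # only the angle is measured directly

    def step(self, gyro_rate, accel_angle, dt):
        # Predict: integrate the bias-corrected gyro rate (drifts if the bias is wrong).
        F = np.array([[1.0, -dt], [0.0, 1.0]])
        self.x = F @ self.x + np.array([dt * gyro_rate, 0.0])
        self.P = F @ self.P @ F.T + self.Q
        # Update: blend in the noisy but drift-free accelerometer tilt estimate.
        y = accel_angle - self.H @ self.x          # innovation, shape (1,)
        S = self.H @ self.P @ self.H.T + self.R    # innovation covariance, shape (1, 1)
        K = self.P @ self.H.T @ np.linalg.inv(S)   # Kalman gain, shape (2, 1)
        self.x = self.x + K @ y
        self.P = (np.eye(2) - K @ self.H) @ self.P
        return float(self.x[0])

# Example: robot held still at 0.1 rad while the gyro has a 0.02 rad/s bias.
rng = np.random.default_rng(1)
kf = TiltKalmanFilter()
dt, true_angle = 0.01, 0.1
gyro_only = true_angle  # naive gyro integration from a perfect start, for comparison
for _ in range(2000):
    gyro = 0.02 + 0.01 * rng.standard_normal()        # true rate is zero; reading = bias + noise
    accel = true_angle + 0.1 * rng.standard_normal()  # noisy tilt from the gravity vector
    gyro_only += gyro * dt
    estimate = kf.step(gyro, accel, dt)
print(f"fused: {estimate:.3f} rad, gyro only: {gyro_only:.3f} rad, true: {true_angle:.3f} rad")
```

Over 20 simulated seconds, the naive gyro integral drifts by roughly 0.4 rad. The fused estimate stays close to the true angle because the filter learns the bias.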

Energy efficiency remains a significant hurdle, particularly for autonomous bionic robots intended for long-term deployment. Biological systems are masters of energy conservation, and mimicking this trait has led to innovations in power-aware control algorithms and energy-harvesting mechanisms. Some robots use passive dynamics—exploiting natural body mechanics like pendulum motions in legs—to reduce motor energy consumption. Others incorporate energy recovery systems that store and reuse energy from braking or descending motions, similar to regenerative braking in electric vehicles. Additionally, low-power neuromorphic processors, which emulate the brain's event-driven architecture, are being deployed to minimize computational energy draw without sacrificing performance.
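Two back-of-the-envelope formulas illustrate these ideas. The first treats a swinging leg as a uniform rigid rod pivoting at the hip. This gives the leg's natural period, which a passive-dynamic gait can match to reduce motor effort. The second computes the potential energy released when a mass is lowered, which bounds what a regenerative drive could bank. Both are idealized. The uniform-rod leg, the example lengths and masses, and the conversion-efficiency figure are assumptions for illustration. Real legs with knees and nonuniform mass distributions behave differently.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def leg_swing_period(leg_length_m: float, g: float = G) -> float:
    """Natural period (s) of a leg modeled as a uniform rod swinging about the hip.

    Physical pendulum: T = 2*pi*sqrt(I / (m*g*d)). For a uniform rod pivoted at
    one end, I = m*L**2/3 and d = L/2, so T = 2*pi*sqrt(2*L / (3*g)).
    """
    return 2.0 * math.pi * math.sqrt(2.0 * leg_length_m / (3.0 * g))

def recoverable_energy_j(mass_kg: float, drop_m: float, efficiency: float,
                         g: float = G) -> float:
    """Estimated energy (J) banked while lowering mass_kg by drop_m.

    `efficiency` is an assumed conversion efficiency (0 to 1) of the regenerative drive.
    """
    return efficiency * mass_kg * g * drop_m

period = leg_swing_period(0.9)
print(f"natural swing period: {period:.2f} s (one swing phase ~ {period / 2:.2f} s)")
print(f"energy from a 0.05 m drop of 30 kg at 50%: {recoverable_energy_j(30, 0.05, 0.5):.2f} J")
```

Longer legs swing more slowly, which is one reason passive-dynamic walkers tune step timing to leg geometry rather than driving the swing motor at an arbitrary rate.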

Looking ahead, the future of bionic robotics perception and control will likely be shaped by advances in soft robotics, swarm intelligence, and brain-computer interfaces. Soft robots, constructed from flexible materials, can undergo large deformations and safely interact with humans and fragile objects, but they require novel sensing and control strategies to manage their continuous body dynamics. Swarm robotics takes inspiration from social insects, coordinating multiple simple robots through local interactions to accomplish complex tasks collectively, an approach that demands robust communication and distributed decision-making. Meanwhile, brain-computer interfaces might one day allow direct neural control of bionic limbs or exoskeletons, creating seamless integration between human intention and robotic action.
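Distributed average consensus, a standard building block in multi-robot systems, illustrates how local interactions can produce a group-level result. Each robot repeatedly nudges its value toward the values of its neighbors. The whole group then converges to the global average without any central coordinator. This sketch assumes synchronous rounds, a fixed ring communication topology, and a scalar quantity such as a shared sensor reading. A step size below 1 divided by the largest neighbor count is a standard sufficient condition for convergence on an undirected connected graph.

```python
from typing import Dict, List

def consensus_step(values: Dict[int, float], neighbors: Dict[int, List[int]],
                   epsilon: float) -> Dict[int, float]:
    """One synchronous round: every robot moves toward the values it hears from neighbors."""
    return {
        i: x + epsilon * sum(values[j] - x for j in neighbors[i])
        for i, x in values.items()
    }

# Six robots on a ring; each talks only to its two immediate neighbors.
n = 6
neighbors = {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}
values = {i: float(i) for i in range(n)}  # e.g. each robot's local reading
for _ in range(100):
    values = consensus_step(values, neighbors, epsilon=0.3)  # 0.3 < 1 / max degree (0.5)
print({i: round(v, 4) for i, v in values.items()})
```

All six values converge to 2.5, the mean of the initial readings, even though no robot ever sees more than two neighbors. Because each pairwise exchange is symmetric, the group total is conserved at every round.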

In conclusion, the design of perception and control systems for bionic robotics is a multidisciplinary endeavor that pushes the boundaries of what machines can achieve. By learning from millions of years of evolutionary refinement in nature, engineers are creating robots that see, hear, feel, and move with unprecedented sophistication. These systems are not merely functional; they embody a new paradigm of interaction between robots and their environments, paving the way for applications in search and rescue, medical assistance, environmental monitoring, and beyond. As research continues to blur the line between biological and artificial intelligence, the dream of building robots that perceive and act like living organisms moves closer to reality.

By /Aug 26, 2025
